Behind WhatsApp News Groups During the Latest War on Lebanon

In times of war and amidst crises, social media platforms turn into battlegrounds where information clashes and truth is sometimes extinguished.

In the fall of 2024, the Israeli enemy launched a war on Lebanon, marked by intense and brutal bombardment that targeted various Lebanese regions. This resulted in martyrs, injuries, and psychological damage among the population due to anxiety and the psychological warfare that accompanied the enemy’s firepower.

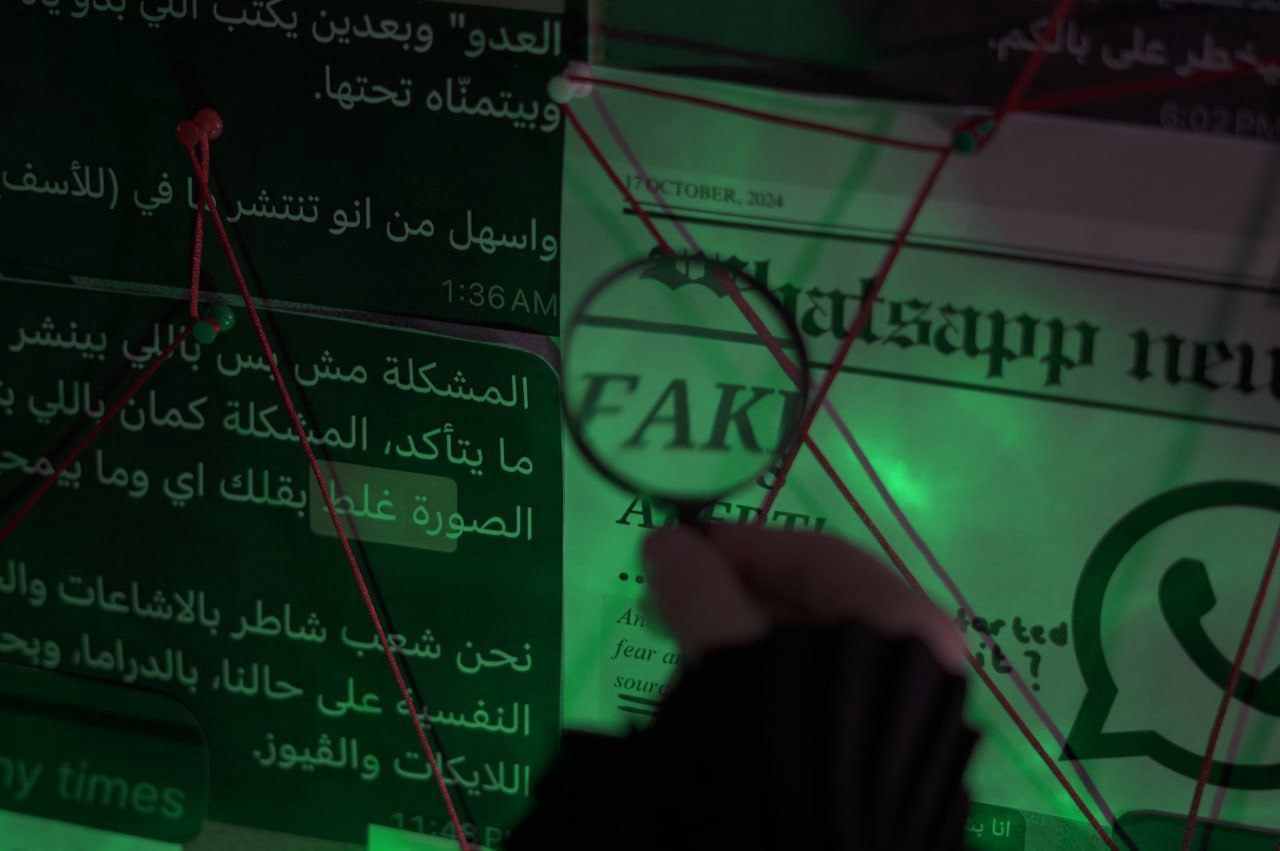

Amidst this crisis, the WhatsApp application shifted from a daily communication tool to a primary source for receiving news, as thousands of Lebanese turned to it to follow developments moment by moment.

However, in the absence of oversight and under mounting psychological pressure, WhatsApp transformed from a source of information into a tool of misinformation. It opened the door for the rapid spread of misleading content within news groups, without any monitoring or accountability.

During the 2024 war on Lebanon, WhatsApp became one of the main tools people used to share news, because it is easy to use, spreads messages quickly, and offers privacy. Unfortunately, these same features also made it fertile ground for false information: a space where fake news can circulate without any control or fact-checking.

When people talk about fake news on social media, they often forget about WhatsApp or don’t take it seriously. But if we look closely, we’ll see that on apps like Instagram, Facebook, and Twitter, we usually choose to look for news. On WhatsApp, it’s different. The news doesn’t wait for you to find it — it comes straight to you. It pops up in your notifications and enters your day without asking.

Wherever you go, it’s there — in the family group, the friends group, or the “news” groups.

News on WhatsApp doesn’t whisper — it shouts: “Here I am! Read me! Believe me — everyone is sharing me, even your relatives and the people you trust!”

War is a fertile ground for the spread of false information.

Dr. Shireen Zbib, who holds a PhD in Media and Communication, explains that fake news tends to increase during wars, and that the first victim of any war is the truth. This happens because it becomes very hard for the public to see clearly what is actually going on. Most of the time, only part of the story is shown, or the stories people hear are inaccurate, and sometimes completely false.

Verification and fact-checking are a duty, not a choice

In this situation, the media plays a key role. It often becomes part of the conflict itself, used as a tool in the war to push certain narratives, support specific sides, and influence public opinion.

This isn’t something new. It’s a pattern we’ve seen in almost every major war — from World War I and II to today’s modern conflicts.

Dr. Zbib attributes the noticeable rise in fake news to several factors, above all the speed at which information now spreads thanks to huge advances in technology.

First, there is now more space, and there are more platforms, for sharing information than ever before.

Second, the lack of trust between people and big, official media outlets has pushed many to turn to alternative sources — including apps like WhatsApp — to get their news.

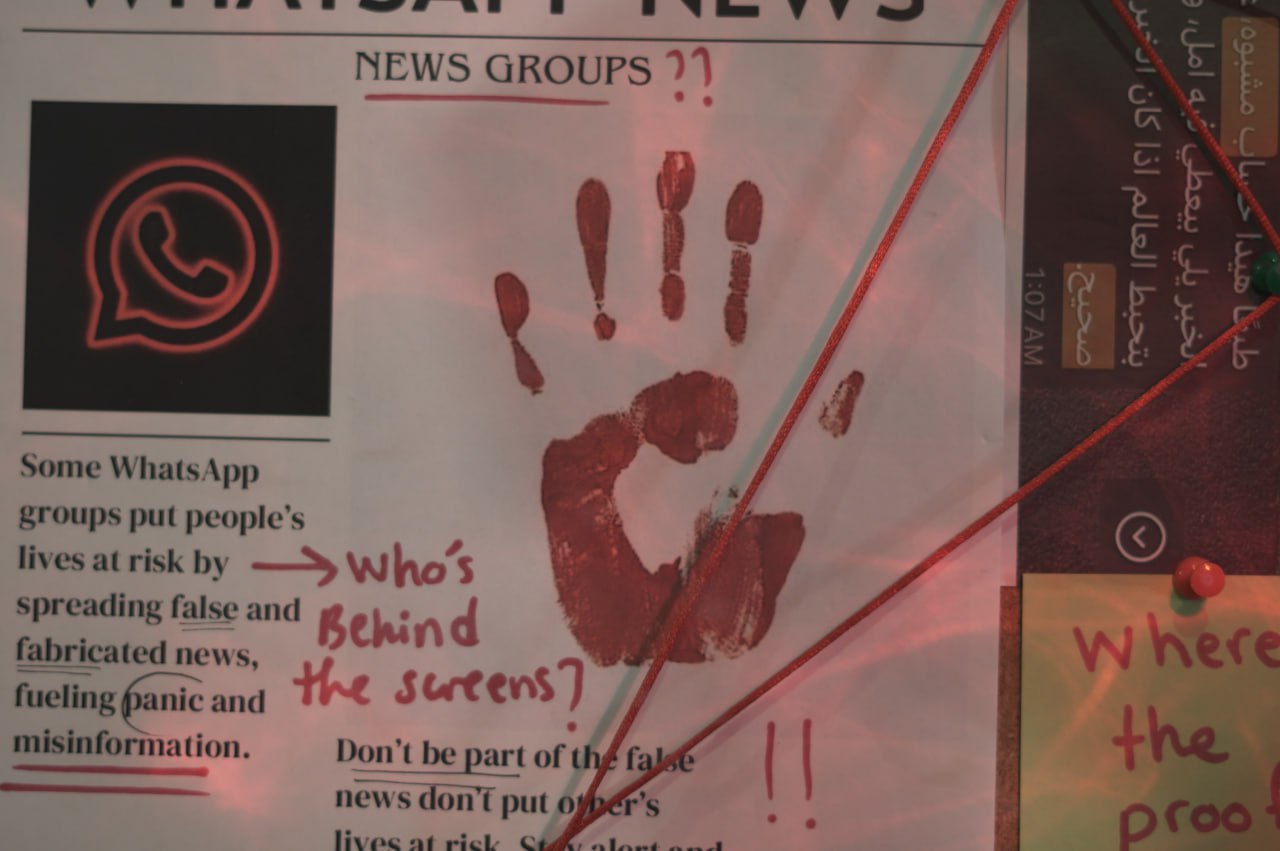

Suspicious groups are a real threat

Zbib points out that relying on WhatsApp doesn’t mean it’s more trustworthy than traditional media. Instead, it reflects a general feeling of worry and lack of trust. Platforms like WhatsApp and Telegram don’t have proper systems for monitoring or filtering content. Anyone can write and share anything they want, without any rules — and that makes these platforms perfect for spreading inaccurate information, especially during wars, when false and misleading news increases sharply.

A video by Dr. Hussein Hazimeh also highlights the main reason why WhatsApp is so vulnerable to fake content: its nature as an instant messaging app. Unlike platforms like Facebook or Instagram, WhatsApp lacks the control tools and content moderation features they have. The video also explains the key differences between these types of platforms, and how that affects the speed and ease with which unreliable or inappropriate content can spread.

In places that are constantly going through crises, sharing news isn’t just a habit — it’s a daily necessity, driven by the urgent need to know what’s going on.

This video by Dr. Shireen Zbib highlights how heavily WhatsApp is used to share news, especially in communities that are unstable or coming out of conflict or war. It also touches on the idea of “social trust” — where information is seen as reliable simply because it comes from a friend or a family member.

To understand how dangerous online content can be, it’s important to know the different types of false information. These definitions come from UNESCO (the United Nations Educational, Scientific and Cultural Organization):

Misinformation:

False or incorrect information that is shared by someone who believes it’s true. There’s no intention to mislead — it’s just wrong.

Disinformation:

False information that is created and shared on purpose to cause harm or mislead others.

Malinformation:

Information that is based on real facts, but is shared with the intent to harm someone — whether it’s a person, an organization, or even a country.

Deepfake:

The use of artificial intelligence, especially deep learning, to create or edit videos or audio. It can make it look or sound like a real person is saying or doing something they never actually did.

When Fake News Spreads on WhatsApp During War

During the war, some WhatsApp groups started spreading false information — sometimes on purpose, sometimes by mistake. Fake news was shared for different reasons: to create panic, get attention, or simply out of a rush to be the first to share something. These groups played a harmful role by increasing fear and anxiety, especially in the absence of proper monitoring or fact-checking before messages were forwarded.

WhatsApp and its news groups became a kind of media compass for some people during the war. After all, how easy is it to get “news” handed to you on a silver platter — all in one message, even if it’s full of lies and misleading claims?

And how hard is it, for many, to simply pause, check the facts, and ask: Is this really true, or are we heading in the wrong direction?

From the sender’s side, the main goals are often:

– Seeking fame

– Making money

– Supporting certain political agendas or conflicts

From the receiver’s side, people may help spread this content because of:

– Supporting personal beliefs or pre-existing opinions

– Wanting to spread hope or positivity (even if it’s false)

– Trying to create fear or panic

WhatsApp groups… where the line between news and noise gets blurred.

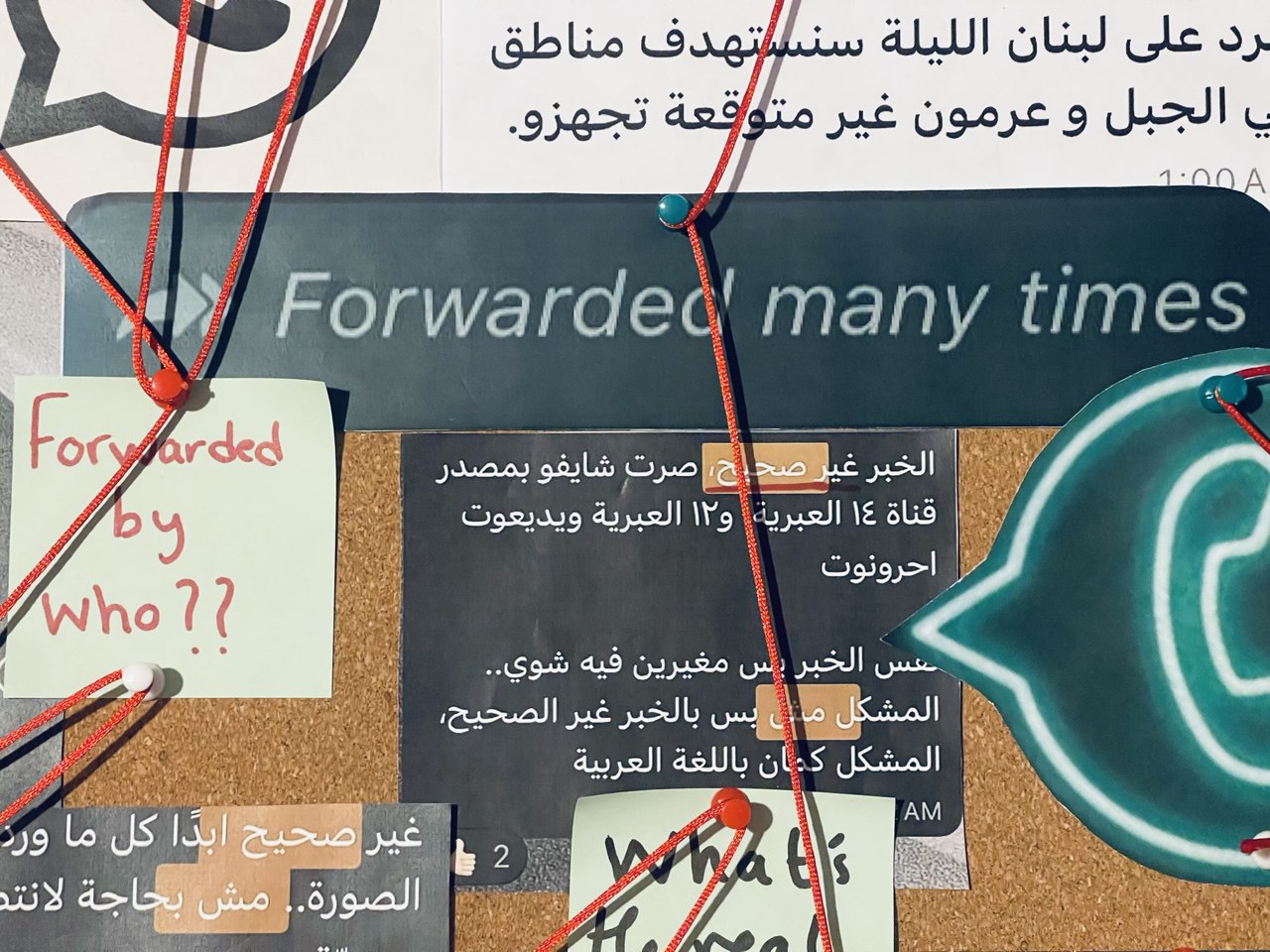

While some so-called “news” groups on WhatsApp became known for spreading false or misleading information — whether intentionally or not — there were also clear efforts by other groups to raise media awareness, correct fake news, and highlight the importance of critical thinking when receiving information.

One example is the “Resilient Community” group, run by journalist Ahmad Sarhan, an editor and writer at Al Mayadeen TV. This group stood out as a strong initiative during the war.

Throughout the conflict, Sarhan focused on fact-checking and debunking fake stories. What made his approach different was that he didn’t just reply with a simple “This is false” — he went further by giving detailed, logical explanations, providing evidence, and helping followers understand why something was fake. His goal was not just to inform, but to strengthen people’s ability to think critically.

Amid all the information chaos and growing misinformation, Sarhan felt a different kind of responsibility. Drawing on his background as a journalism student, he created a WhatsApp group designed to offer reliable, analytical content: not just headlines, but deeper insights that explain the news and uncover what lies behind it.

Resilient Community WhatsApp Group

In the video, Sarhan shares how what started as a personal initiative became a space that promotes awareness and helps build an audience that thinks, questions, and analyzes, rather than blindly sharing whatever comes its way.

With the rapid rise of digital media, the concept of citizen journalism — where ordinary people can report news and document events using their smartphones and social media — has become more common. While this trend has added a new dimension to news-sharing, allowing for faster coverage and perspectives that traditional media might miss, it also comes with serious concerns.

In many cases, citizen journalism becomes a main source of rumors and fake news — especially on closed platforms like WhatsApp groups, which lack oversight and professional fact-checking.

In today’s world of information chaos, creating a “news” group on WhatsApp doesn’t require any journalism training or experience.

In this video, journalist Ahmad Sarhan criticizes the growing trend of non-experts starting news groups and highlights the risks of spreading unverified information — especially during times of war and fear.

Because not everything you hear should be shared — and not everyone who creates a “group” is a trusted source.

Digital Transformation in Media: WhatsApp Channels as a New Way to Reach the Public

With the rapid pace of digital change, media organizations are constantly looking for new ways to stay connected with their audience. This has led many outlets in recent years to launch their own WhatsApp Channels.

Dr. Zbib explains that the main idea behind media organizations joining WhatsApp is the growing competition in the news space — major outlets are no longer the only players. Today, many people around the world use WhatsApp not just for personal chats, but also as a primary source of news.

But why is that?

Because nowadays, people don’t want to search for news themselves. They don’t want to visit websites or scroll through traditional news platforms. Instead, they prefer to receive updates directly and instantly on their phones.

Zbib stresses that it’s now essential for media organizations to have a strong presence on WhatsApp. They need to meet their audience where they already are — and today, that place is WhatsApp. If these organizations don’t follow their audience, they risk losing them altogether.

Al Jadeed Channel Takes on a Fresh Look Through WhatsApp Channels

Critical Thinking

Mahdi Dirani, a journalist and fact-checker at Al Mayadeen TV, emphasizes the importance of critical thinking when dealing with information.

He explains that no matter how many tools exist to help us detect false or misleading content, the most important thing starts within the person: self-criticism.

Our own eyes — and minds — are some of the strongest tools we have to spot misinformation. Thinking and analyzing are essential, and we should rely on them before turning to external tools.

We need to question what we read, challenge what we see, and always ask ourselves:

Is this information actually true?

Could it be false or misleading to serve a certain narrative or agenda?

This kind of mindset should always guide how we process information.

Journalist Ahmad Sarhan explains that during the war, many headlines circulated claiming to come from “Israeli media” or, specifically, “Yedioth Ahronoth” and other Hebrew-language outlets. Unfortunately, fabricating headlines like these is easy, and they are often used to spread fear and panic among the public.

Sarhan points out that people often unknowingly take part in the very psychological warfare that targets them. For example, someone might receive a message saying:

“Netanyahu is planning to bomb Beirut today and turn it into hell.”

Out of concern, they forward it to their family group on WhatsApp and ask, “Is this true?”

Let’s assume they mean well and genuinely want to verify the information.

But here’s what usually happens next:

The message gets forwarded from the family group to friends, and from there to even more people. Very quickly, it turns into a widely believed "fact":

“Netanyahu will turn Beirut into hell, according to Channel 13 (Israeli media).”

And it’s so easy to add that phrase — “Channel 13 (Israeli)” — while typing.

Anyone can make up a fake story and falsely attribute it to an official-sounding source, like:

“Channel 13: Netanyahu threatens from Haifa beach.”

That’s why it's critical for people to be aware.

Typing “Channel 13” is easy, but it is just as easy to check the channel’s official website or Twitter account to confirm whether the story is real before spreading it.

"Typing 'Channel 13' on the keyboard is easy"

As journalist Ahmad Sarhan puts it in his own words:

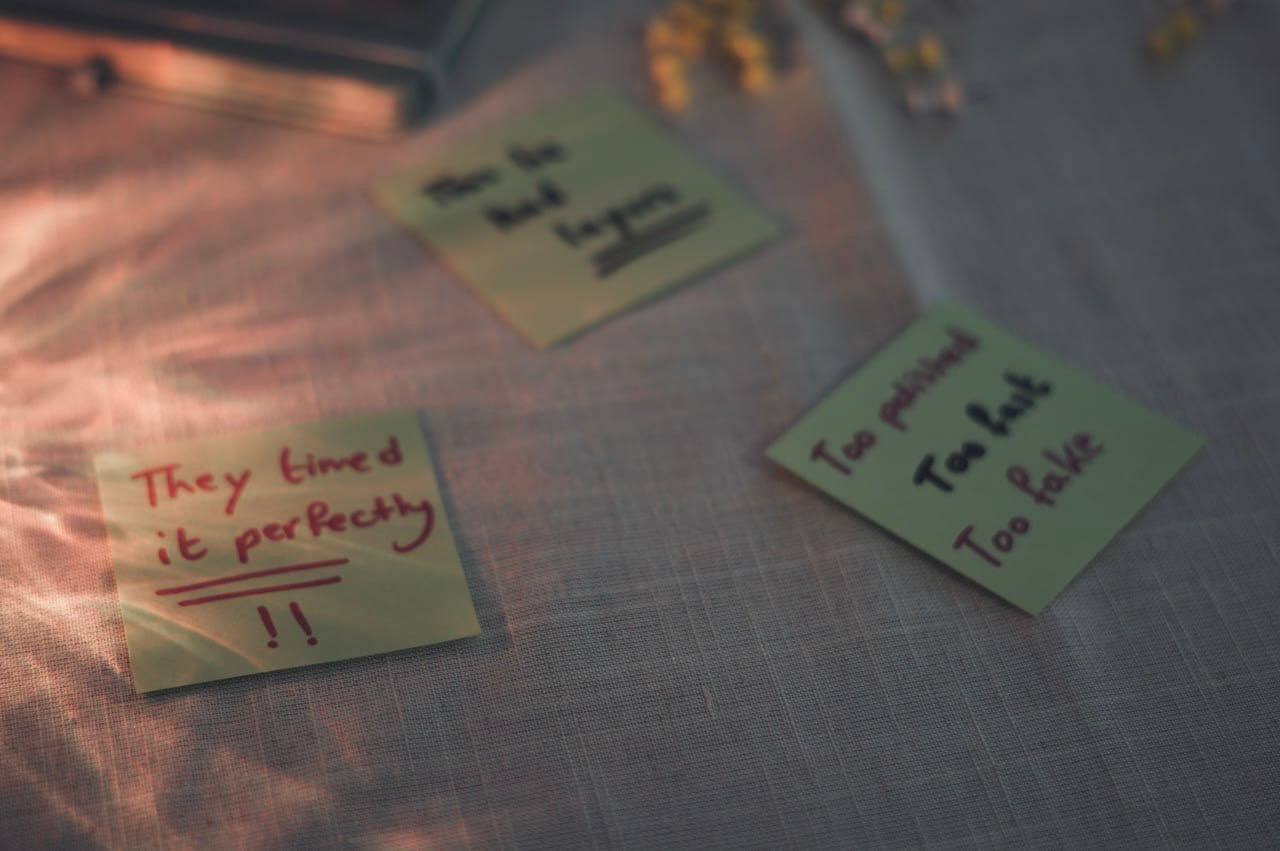

"There are warning bells that should make us pause and think when we see any piece of news—before we believe it or share it."

First Warning Bell: Spelling Mistakes

One of the simplest and clearest warning signs is spelling mistakes.

If you come across a message claiming to be from an official source but it contains spelling or grammar errors, that should raise a big red flag.

Trusted, professional sources almost never publish content with basic language mistakes, except in rare circumstances. So when you spot an error, stop and ask:

“Would a real news agency write it like this?”

Sometimes, that small doubt is all you need to avoid spreading a lie.

Second Warning Bell: “Forwarded Many Times”

When a WhatsApp message carries the label “Forwarded many times,” it has passed through a long chain of chats: WhatsApp applies this label once a message has been forwarded five or more times since it was originally sent.

This doesn’t automatically mean the news is false; it might be true. But the label is not a sign of reliability either. False or misleading news often carries it, because emotional, dramatic, or controversial content tends to spread quickly.


Third Warning Bell: No Clear Source

When a message has no clear source and simply says something like “according to special sources,” without naming an official person or organization, that is a reason to pause and ask where the news came from.

Fourth Warning Bell: Dramatic Language

Dramatic words like “disaster” or “beware!!!” have become a common way to grab attention, especially when combined with emojis such as rockets or red dots. These tricks are designed to catch your eye more than to share accurate information.

Fifth Warning Bell: Illogical news.

Logic is key — and using your “logic button” when reading any news is essential. If the story doesn’t make sense, that’s a clear warning sign that it might be false.

Sarhan adds that there are no fixed rules. Sometimes a piece of news is true even if it is labeled “breaking,” uses emojis, or contains a spelling mistake. But these features were common in the false information that circulated during the war, so they remain red flags that should make us pause and double-check.

The blue checkmark is not a sign of trust

The blue checkmark is no longer a reliable sign of credibility. Today, anyone who pays for a subscription can get it — it doesn’t necessarily mean the account is official or trustworthy.

In this video, journalist Ahmad Sarhan highlights the danger of blindly trusting verified accounts. He explains how important it is to cross-check news across multiple sources to avoid falling into the trap of misinformation.

Excitement During War

Sarhan explains that during war, some people — out of excitement — may create fake victories for the resistance, like when news spread about captured Israeli soldiers. But when it turns out these stories aren’t true, those who believed them without checking often feel disappointed, and sometimes even hopeless.

He adds, “Some think they’re boosting morale this way — but in reality, they do the opposite when the truth comes out. The resistance never needed fake victories to begin with.”

Don’t fall into the trap of falsely hopeful news

He also adds that some news outlets, through their social media accounts, preferred to use exaggerated or dramatic headlines instead of presenting the full, accurate story. The goal was often to make money and gain more views, rather than share the truth.

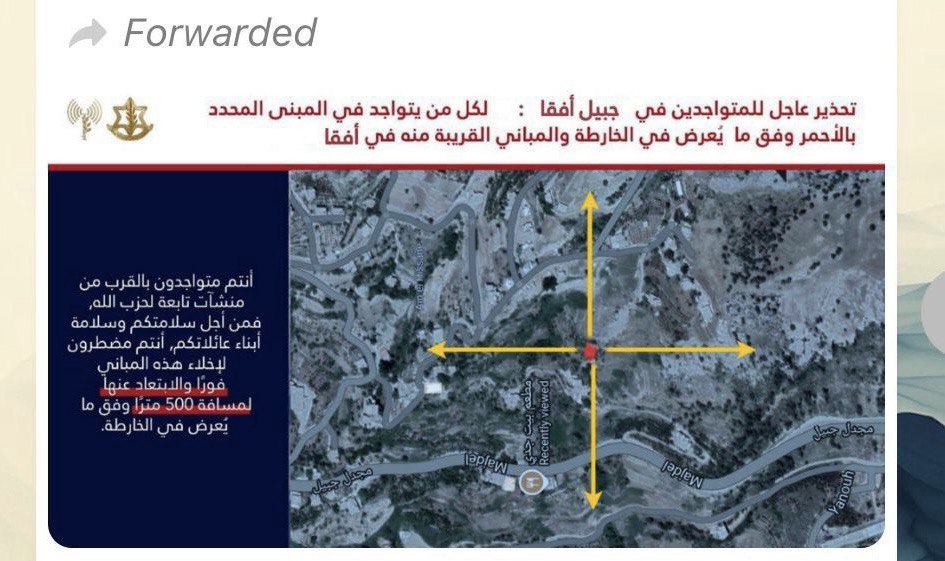

Sarhan adds: “It’s important to highlight something very serious regarding Avichay Adraee. Yes, we were forced to follow him. We had no choice but to keep checking his page regularly, because he was posting critical war-related alerts, and we needed to stay informed for our own safety.

“But at the same time, we found ourselves compelled to follow a figure who represents the enemy. That is not without consequences: there may be hidden motives behind this kind of exposure, which could become clearer later on.

“For example, Avichay previously shared misleading information, such as a report allegedly issued by Sahel Hospital, which later turned out to be outdated or inaccurate. We’ve seen similar tactics before: false claims about hospitals and other locations, like the reports about facilities in Ouzai from previous years.

“In this way, people found themselves, often without realizing it, believing Avichay and, worse, believing the enemy’s narrative.

“The real problem is that many haven’t done enough to develop their media awareness or protect themselves from misinformation. It’s time we finally wake up.”

Dirani points out that during times of war, harmful information often spreads, built on partial truths. For example, photos might show the airport packed with people — which is partly true — but the rest of the narrative aims to damage reputations and distort the bigger picture, increasing fear and panic in Lebanon.

There’s also completely false or misleading information being shared, like video clips claimed to be from certain events, when in fact they’re old videos being recirculated. Sometimes, this isn’t done with bad intentions, but simply because there’s no journalist or camera crew on the ground — so people resort to using archive footage without making that clear. These videos then spread quickly, even if there was no intent to mislead.

On the other hand, the Israeli side deliberately spreads harmful content meant to smear and manipulate. They often use real videos to make their narrative seem more credible. Avichay Adraee was part of this propaganda effort.

Voice notes (or “voice messages”) on WhatsApp have also become a powerful tool for shaping public opinion, especially during times of war. They’re often used to share unverified information in a way that sounds real — giving it a false sense of credibility and making it easier to spread among users.

In this context, Dr. Shireen Zbib points to three main strategies commonly used in voice notes to spread misinformation:

Dr. Zbib points out that there is a set of questions we should ask ourselves whenever we come across any news. These questions can help us avoid falling into the trap of fake or misleading information.

What?

What is the content of the news?

Start by carefully understanding the news before forming any opinion about it.

Who and Where?

Who wrote or shared the news? What’s the source?

Can I find the same news reported somewhere else?

If only one source is reporting it, be cautious. If multiple trusted media outlets are covering the same story, it is more likely to be reliable.

When?

Is the news recent or outdated?

Very often, old news is reshared as if it's something new — and that can be very misleading.

Why?

Why was this news published? What’s the purpose behind it?

Is there an agenda or a hidden motive? Thinking about the intent is essential.

How?

How is the news presented? Is there exaggeration?

The tone and style can reveal a lot. Sensational language is often a red flag.

These are the questions we should ask before doing anything — even before believing the news, and definitely before sharing it.

Too often, we read something and rush to share it without checking, and that’s where the real problem begins.

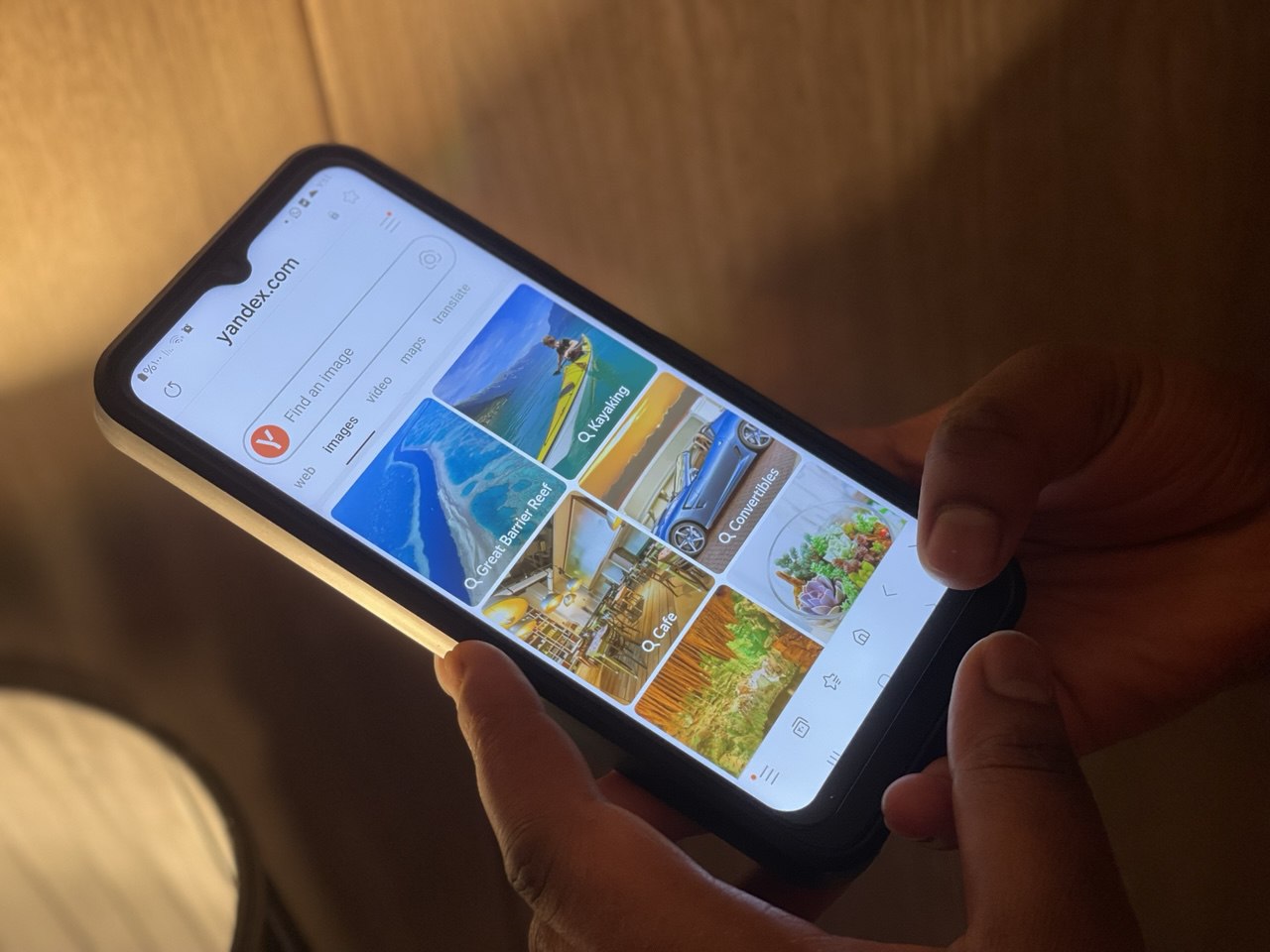

Image Analysis and Reverse Search Tools

When we have an image, we can use reverse image search to find out when and where it was first published. This process helps trace the original source of the image and can reveal who may have started spreading misinformation.

One advanced reverse search tool is TinEye, which allows us to see who first published the image, the website it appeared on, and the headline under which it was used.

Advanced Image Search Tools

Advanced image search lets us narrow the results by when the image was posted, down to a specific year or an even shorter time window. This is crucial for getting the most accurate and up-to-date results.

Search engines like Google Images are also essential for tracing images and videos. The process often begins with a screenshot of the image (or of a frame from the video), which is then traced through these tools.

Another powerful search engine is Yandex, a Russian platform similar to Google Images in terms of functionality.

It’s worth noting that some countries have their own local search engines for reasons of national security and information control. For example, China relies on Baidu, while Russia uses Yandex to monitor and manage the flow of information within its borders.

Yandex: The Russian Search Engine That Excels in Advanced Image Verification

Video Analysis Tools

One powerful tool for verifying videos is InVID. It breaks a video down into key frames so that each frame can be analyzed separately. This helps us pinpoint where any manipulation or misleading content may exist within the video.

For example, a video might show a distinctive building. If we know, based on the country’s known architecture, that such a building doesn’t exist in a country like Lebanon, we can search for where it does exist. By comparing it with satellite images or real photos, we can identify the video’s true origin.

Dirani notes that certain parties have started applying pressure to undermine fact-checkers and limit their impact. He adds that some Western institutions had previously provided grants and funding to support fact-checking efforts. However, with the arrival of the new U.S. administration, this support was cut off, leading to reduced backing for these initiatives and a noticeable decline in funding.

Media Is More Than Just a News Channel

Media isn’t just a tool for delivering news — it’s a battleground that deeply affects our lives, our security, and our stability.

In this context, journalist Ahmad Sarhan shares an important message urging us to recognize the serious risks we face today. He emphasizes the importance of distinguishing between reliable sources and misleading ones, and highlights the role each of us plays in raising awareness among those around us — especially in such a sensitive and critical environment.

The Greatest Responsibility Today Falls on Individuals

The biggest bet today is on individuals — on each of us — to take responsibility for how we consume and share media.

We must ask questions, doubt, and verify.

The future of media literacy starts with us: with our daily choices, with each click of “forward,” and whether we make that choice consciously or not.

Perhaps this war has served as a wake-up call for all of us — a reminder that we must verify every piece of news once, twice, even three times, even if it comes from a so-called "trusted source."

Verification is a duty, not a choice.

Let our mission — today and in the future — be to protect ourselves and our communities from false information.